Proof (Theorem 1). Define the risk function \begin{aligned}

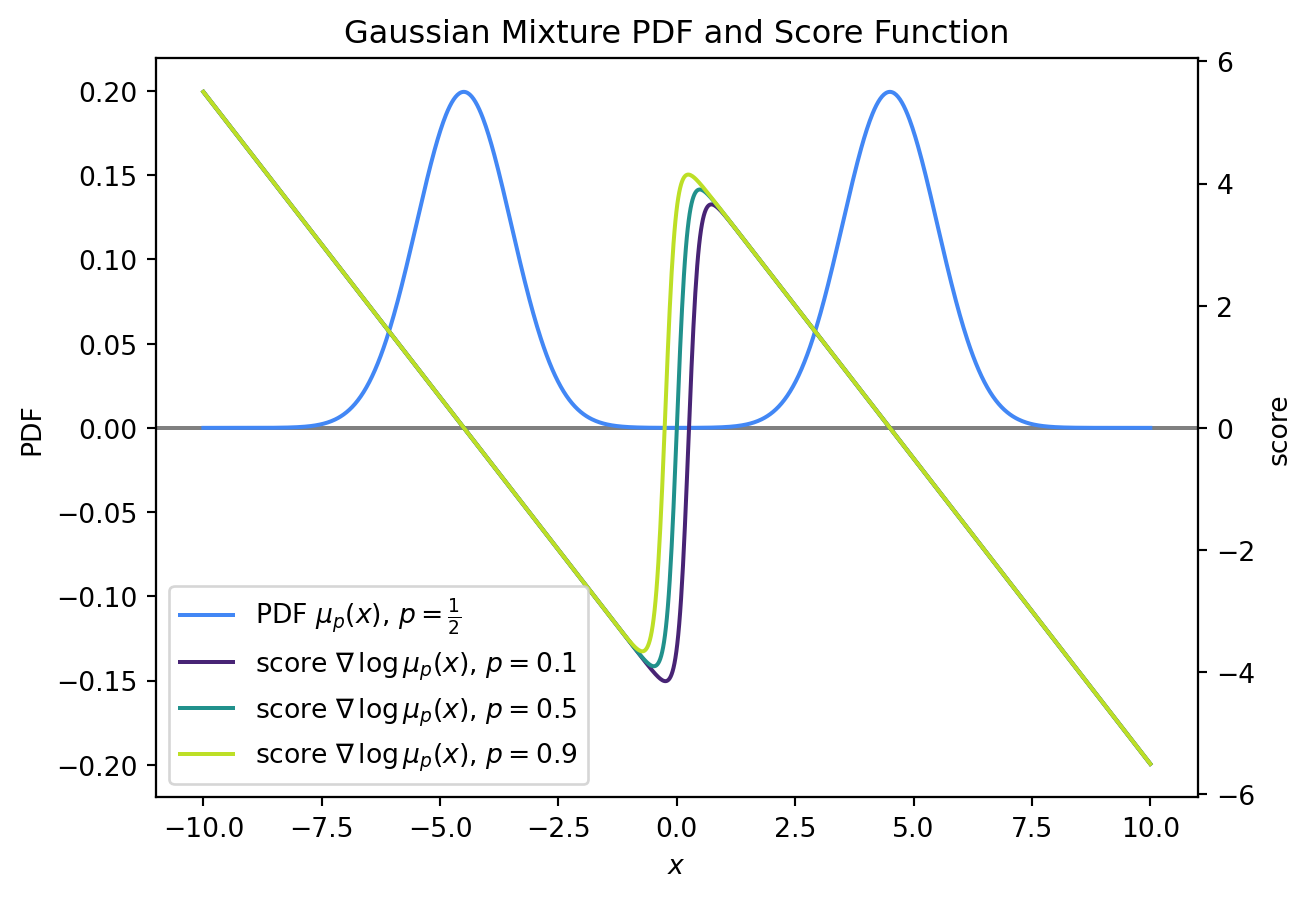

\varphi(p; x)

&=

\nabla^2 \log \mu_p (x) + \frac{1}{2}(\nabla \log \mu_p (x))^2,

\end{aligned} so that \begin{aligned}

\hat p_{\text{SME}} = \mathop{\mathrm{arg\,min}}_{p \in [0, 1]} \frac{1}{n} \sum_{i=1}^{n} \varphi(p; X_i).
\end{aligned} Furthermore, denote \begin{aligned}
\varphi_n(p) \overset{\mathrm{def}}{=} \frac{1}{n} \sum_{i=1}^{n} \varphi(p; X_i).
\end{aligned} Since the SME is consistent, \hat p_{\text{SME}} is close to p^\star for large n. Assume that p^\star lies in the interior of [0, 1], so that \hat p_{\text{SME}} satisfies the first-order optimality condition with high probability. Taylor-expanding this condition around p^\star, we obtain that \begin{aligned}
0 &= \partial_p \varphi_n (\hat p_{\text{SME}}) \approx \partial_p \varphi_n (p^\star) + \partial_p^2 \varphi_n (p^\star) (\hat p_{\text{SME}} - p^\star).
\end{aligned} Rearranging, we obtain that \begin{aligned}
\sqrt{n}(\hat p_{\text{SME}} - p^\star) \approx -\frac{\sqrt{n}\, \partial_p \varphi_n (p^\star)}{\partial_p^2 \varphi_n (p^\star)}.
\end{aligned} Let X \sim \mu_{p^\star}. As n \to \infty, the Central Limit Theorem tells us that \begin{aligned}
\sqrt{n}\, \partial_p \varphi_n (p^\star) &\overset{\text{D}}{\to} \mathcal N\left(0, \mathop{\mathrm{Var}}\left[ \partial_p \varphi(p^\star; X) \right]\right) = \mathcal N\left(0, \mathbb E\left[ (\partial_p \varphi(p^\star; X))^2 \right]\right),
\end{aligned} where the last equality holds because \mathbb E[\partial_p \varphi(p^\star; X)] = \partial_p \big\vert_{p = p^\star} \mathbb E[\varphi(p; X)] = 0. This is because the population objective p \mapsto \mathbb E[\varphi(p; X)] is minimized at p^\star, which is also what makes the SME consistent. On the other hand, by the Law of Large Numbers, the denominator satisfies \begin{aligned}
\partial_p^2 \varphi_n(p^\star) \to \mathbb E\left[ \partial_p^2 \varphi(p^\star; X) \right].
\end{aligned} Thus, by Slutsky's theorem, we have that \begin{aligned}
\sqrt{n}(\hat p_{\text{SME}} - p^\star) &\overset{\text{D}}{\to} \mathcal N\left( 0, \frac{\mathbb E\left[ \left( \partial_p \varphi(p^\star; X) \right)^2 \right]}{\mathbb E\left[ \partial_p^2 \varphi(p^\star; X) \right]^2} \right).
\end{aligned} In fact, we can simplify the denominator of this variance. For any p \in (0, 1) and X \sim \mu_p (we use this with p = p^\star), we have that \begin{aligned}
\mathbb E\left[ \partial_p^2 \varphi(p; X) \right]
&= \int \partial_p^2 \varphi(p; x) \,\mathrm{d}{\mu_p(x)} \\
&= -\int \partial_p \varphi(p; x) \, \partial_p \mu_p(x) \,\mathrm{d}{x} \\
&= -\int \partial_p \varphi(p; x) \left( \frac{\partial_p \mu_p(x)}{\mu_p(x)} \right) \,\mathrm{d}{\mu_p(x)} \\
&= -\int (\partial_p \varphi(p; x)) (\partial_p \log \mu_p (x)) \,\mathrm{d}{\mu_p(x)},
\end{aligned} where the second equality follows by differentiating the identity \int \partial_p \varphi(p; x) \, \mu_p(x) \,\mathrm{d}{x} = 0 in p. This identity holds for every p because the population objective q \mapsto \int \varphi(q; x) \, \mu_p(x) \,\mathrm{d}{x} is minimized at q = p. Hence, \begin{aligned}
\mathbb E\left[ \partial_p^2 \varphi(p; X) \right]^2 &= \mathbb E\left[ \partial_p \varphi(p; X) \, \partial_p \log \mu_p (X) \right]^2.
\end{aligned}
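
As a sanity check, this identity can be verified numerically. The sketch below assumes μ_p = p\,\mathcal N(m, 1) + (1 - p)\,\mathcal N(-m, 1). That is our reading of the Section 3.1 setup, not something stated in this proof. The helper names (density, phi, dlog_density_dp, check_identity) are hypothetical. The sketch differentiates \varphi in p by finite differences and integrates against \mu_p with a Riemann sum on a fine grid. Because the identity holds for every p, it is checked at p = 1/2 and at p = 0.3.

```python
import numpy as np

# Assumed model (hypothetical reading of Section 3.1): mu_p = p N(m, 1) + (1 - p) N(-m, 1), 0 < p < 1.

def density(p, x, m):
    """mu_p(x), the two-component Gaussian mixture density."""
    norm = np.sqrt(2 * np.pi)
    return (p * np.exp(-0.5 * (x - m) ** 2) + (1 - p) * np.exp(-0.5 * (x + m) ** 2)) / norm

def phi(p, x, m):
    """Risk phi(p; x) = (log mu_p)''(x) + 0.5 * ((log mu_p)'(x))**2, derivatives taken in x."""
    # mu_p(x) is proportional to exp(-x^2/2) * cosh(m*x + c) with c = 0.5 * log(p / (1 - p)).
    t = np.tanh(m * x + 0.5 * np.log(p / (1 - p)))
    score = -x + m * t                   # d/dx log mu_p(x)
    hess = -1.0 + m ** 2 * (1 - t ** 2)  # d^2/dx^2 log mu_p(x)
    return hess + 0.5 * score ** 2

def dlog_density_dp(p, x, m):
    """d/dp log mu_p(x) = (mu_+(x) - mu_-(x)) / mu_p(x), written in a numerically stable form."""
    c = 0.5 * np.log(p / (1 - p))
    return np.sinh(m * x) / (np.sqrt(p * (1 - p)) * np.cosh(m * x + c))

def check_identity(p, m, h=1e-4, half_width=12.0, num=200_001):
    """Return (E[d^2/dp^2 phi], -E[d/dp phi * d/dp log mu_p]) for X ~ mu_p."""
    x = np.linspace(-m - half_width, m + half_width, num)
    w = density(p, x, m) * (x[1] - x[0])  # Riemann-sum quadrature weights
    d1 = (phi(p + h, x, m) - phi(p - h, x, m)) / (2 * h)                    # central first difference
    d2 = (phi(p + h, x, m) - 2 * phi(p, x, m) + phi(p - h, x, m)) / h ** 2  # central second difference
    return np.sum(d2 * w), -np.sum(d1 * dlog_density_dp(p, x, m) * w)

for p in (0.5, 0.3):
    lhs, rhs = check_identity(p, m=2.0)
    print(f"p={p}: E[phi_pp] = {lhs:.6f}, -E[phi_p * score_p] = {rhs:.6f}")
```

The two printed columns should agree up to finite-difference error.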

Computing the partial derivative of the risk function with respect to p, we obtain that \begin{aligned}
\partial_p \varphi(p; X) &= \partial_p \nabla^2 \log \mu_p (X) + (\nabla \log \mu_p(X)) (\partial_p \nabla \log \mu_p(X)).
\end{aligned}
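
With the Section 3.1 formulas at p = 1/2, this derivative becomes \partial_p \varphi = -8m(X + m\tanh(mX))/(e^{mX} + e^{-mX})^2. That expression is the one bounded below. The following minimal finite-difference check assumes the mixture \mu_p = p\,\mathcal N(m, 1) + (1 - p)\,\mathcal N(-m, 1), differentiated at p = 1/2. Both the model and the helper names are our own assumptions.

```python
import numpy as np

# Assumed model (hypothetical): mu_p = p N(m, 1) + (1 - p) N(-m, 1), differentiated at p = 1/2.

def phi(p, x, m):
    """Risk phi(p; x) = (log mu_p)''(x) + 0.5 * ((log mu_p)'(x))**2."""
    t = np.tanh(m * x + 0.5 * np.log(p / (1 - p)))
    return -1.0 + m ** 2 * (1 - t ** 2) + 0.5 * (-x + m * t) ** 2

def dphi_dp_closed_form(x, m):
    """-8m (x + m tanh(mx)) / (e^{mx} + e^{-mx})^2, i.e. d/dp phi at p = 1/2."""
    return -8 * m * (x + m * np.tanh(m * x)) / (np.exp(m * x) + np.exp(-m * x)) ** 2

def dphi_dp_finite_difference(x, m, h=1e-6):
    """Central difference in p around p = 1/2."""
    return (phi(0.5 + h, x, m) - phi(0.5 - h, x, m)) / (2 * h)

x = np.linspace(-3.0, 3.0, 61)
gap = np.max(np.abs(dphi_dp_closed_form(x, 2.0) - dphi_dp_finite_difference(x, 2.0)))
print(f"max |closed form - finite difference| = {gap:.2e}")
```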

We now lower-bound the numerator and upper-bound the denominator of this asymptotic variance, with all quantities evaluated at p = p^\star.

Lower bound on numerator.

Using the calculations in Section 3.1, we compute that \begin{aligned}
\left\lvert \partial_p \varphi(p; X) \right\rvert
&= \left\lvert \partial_p \nabla^2 \log \mu_p (X) + (\nabla \log \mu_p(X)) (\partial_p \nabla \log \mu_p(X)) \right\rvert \\
&= \left\lvert -\frac{16m^2}{(e^{mX} + e^{-mX})^2} \cdot \tanh(mX) + (-X + m \tanh(mX)) \cdot \frac{8m}{(e^{mX} + e^{-mX})^2} \right\rvert \\
&= \frac{8m}{(e^{mX} + e^{-mX})^2} \cdot \left\lvert X + m \tanh(mX) \right\rvert.
\end{aligned} Now consider the event \left\lvert X \right\rvert \in [\frac{1}{2m}, \frac{1}{m}]. On this event, we have that \begin{aligned}
\frac{\left\lvert X + m \tanh(mX) \right\rvert}{(e^{mX} + e^{-mX})^2}
&\geq \frac{m \left\lvert \tanh(mX) \right\rvert - \left\lvert X \right\rvert}{(e^{mX} + e^{-mX})^2} \\
&\geq \frac{m \tanh (\frac{1}{2}) - \frac{1}{m}}{(e + e^{-1})^2} \\
&\gtrsim m,
\end{aligned} where the first inequality is the reverse triangle inequality. The second uses m\left\lvert X \right\rvert \in [\frac{1}{2}, 1] together with the monotonicity of \tanh and \cosh on [0, \infty). The last holds for m \geq 2, say. Thus, on this event, we have that \begin{aligned}
\left\lvert \partial_p \varphi(p; X) \right\rvert = \frac{8m\left\lvert X + m \tanh(mX) \right\rvert}{(e^{mX} + e^{-mX})^2} \gtrsim m^2.
\end{aligned} Hence, we obtain that \begin{aligned}
\mathbb E\left[ \left( \partial_p \varphi(p; X) \right)^2 \right]
&\geq \mathbb E\left[ \left\lvert \partial_p \varphi(p; X) \right\rvert^2 \mathbf 1_{\left\lvert X \right\rvert\in [\frac{1}{2m}, \frac{1}{m}]} \right] \\
&\gtrsim m^4 \, \mathbb P\left( \left\lvert X \right\rvert\in [\tfrac{1}{2m}, \tfrac{1}{m}] \right) \\
&\gtrsim m^3 e^{-\frac{1}{2}m^2},
\end{aligned} where the last step holds because the set \{x : \left\lvert x \right\rvert \in [\frac{1}{2m}, \frac{1}{m}]\} has total length \frac{1}{m}, and the density of X on it is \gtrsim e^{-\frac{1}{2}m^2}. For x \in [\frac{1}{2m}, \frac{1}{m}] and m \geq 1, the component \mathcal N(m, 1) has density \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}(x - m)^2} \geq \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}m^2}; the same holds symmetrically for \mathcal N(-m, 1) on the negative side.

Upper bound on denominator.

First, using the work above, we have that \begin{aligned}
\left\lvert \partial_p \varphi(p; X) \right\rvert
&= \frac{8m}{(e^{mX} + e^{-mX})^2} \cdot \left\lvert X + m \tanh(mX) \right\rvert \\
&\leq \frac{8m}{(e^{mX} + e^{-mX})^2} \cdot \left( \left\lvert X \right\rvert + m \right),
\end{aligned} by the triangle inequality. On the other hand, using Section 3.1, we compute that \begin{aligned}
\left\lvert \partial_p \log \mu_p (X) \right\rvert &= \left\lvert 2\tanh(mX) \right\rvert \leq 2.
\end{aligned} Hence, we find that \begin{aligned}
\left\lvert \mathbb E\left[ (\partial_p \varphi(p; X)) (\partial_p \log \mu_p (X)) \right] \right\rvert
&\leq \mathbb E\left[ \left\lvert \partial_p \varphi(p; X) \right\rvert \left\lvert \partial_p \log \mu_p (X) \right\rvert \right] \\
&\leq 2\, \mathbb E\left[ \left\lvert \partial_p \varphi(p; X) \right\rvert \right] \\
&\lesssim \mathbb E\left[ \frac{m}{(e^{mX} + e^{-mX})^2} \cdot \left( \left\lvert X \right\rvert + m \right) \right] \\
&\lesssim m \int_{0}^{\infty} \frac{x + m}{(e^{mx} + e^{-mx})^2} \cdot e^{-\frac{1}{2}(x - m)^2} \,\mathrm{d}{x} \\
&\leq m \int_{0}^{\infty} (x + m) \, e^{-\frac{1}{2}(x - m)^2 - 2mx} \,\mathrm{d}{x} \\
&= m \int_{0}^{\infty} (x + m) \, e^{-\frac{1}{2}(x + m)^2} \,\mathrm{d}{x} \\
&= m e^{-\frac{1}{2}m^2}.
\end{aligned} The fourth step holds because the integrand depends only on \left\lvert X \right\rvert, whose density on [0, \infty) is at most \frac{2}{\sqrt{2\pi}} e^{-\frac{1}{2}(x - m)^2}. The fifth uses (e^{mx} + e^{-mx})^2 \geq e^{2mx}. The last two follow by completing the square, -\frac{1}{2}(x - m)^2 - 2mx = -\frac{1}{2}(x + m)^2, and then substituting u = x + m.

Combining the upper and lower bounds, and recalling that the denominator equals \mathbb E\left[ \partial_p \varphi(p; X) \, \partial_p \log \mu_p (X) \right]^2, we find that the asymptotic variance of the score matching estimator satisfies \begin{aligned}
\frac{\mathbb E\left[ \left( \partial_p \varphi(p; X) \right)^2 \right]}{\mathbb E\left[ \partial_p^2 \varphi(p; X) \right]^2}
&\gtrsim \frac{m^3 e^{-\frac{1}{2}m^2}}{\left( me^{-\frac{1}{2}m^2} \right)^2} = m e^{\frac{1}{2}m^2}.
\end{aligned} This shows that the asymptotic variance grows as \Omega\left( m e^{\frac{1}{2}m^2} \right), i.e., exponentially in m^2, which is the desired blow-up. Note that this is unbounded as a function of m!
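
The growth can also be inspected numerically. The sketch below assumes p^\star = 1/2 and X \sim \frac{1}{2}\mathcal N(m, 1) + \frac{1}{2}\mathcal N(-m, 1), which is our reading of Section 3.1. It uses the closed forms of \partial_p \varphi and of \partial_p \log \mu_p = 2\tanh(mX) from above. It also replaces \mathbb E[\partial_p^2 \varphi]^2 with \mathbb E[\partial_p \varphi\, \partial_p \log \mu_p]^2 via the earlier identity. Expectations are Riemann sums on a fine grid, and sme_asymptotic_variance is a hypothetical helper name.

```python
import numpy as np

# Assumed model (hypothetical reading of Section 3.1): X ~ 0.5 N(m, 1) + 0.5 N(-m, 1), p* = 1/2.

def sme_asymptotic_variance(m, half_width=12.0, num=200_001):
    """E[(d/dp phi)^2] / E[d/dp phi * d/dp log mu_p]^2 at p = 1/2, via a Riemann sum."""
    x = np.linspace(-m - half_width, m + half_width, num)
    dens = (np.exp(-0.5 * (x - m) ** 2) + np.exp(-0.5 * (x + m) ** 2)) / (2 * np.sqrt(2 * np.pi))
    w = dens * (x[1] - x[0])  # quadrature weights for E[.]
    # d/dp phi at p = 1/2; note 8m / (e^{mx} + e^{-mx})^2 equals 2m / cosh(mx)^2.
    dphi = -2 * m * (x + m * np.tanh(m * x)) / np.cosh(m * x) ** 2
    score_p = 2 * np.tanh(m * x)  # d/dp log mu_p(x) at p = 1/2
    return np.sum(dphi ** 2 * w) / np.sum(dphi * score_p * w) ** 2

for m in (2.0, 3.0, 4.0, 5.0):
    v = sme_asymptotic_variance(m)
    print(f"m={m:.0f}  variance={v:.3e}  variance*exp(-m^2/2)={v * np.exp(-m ** 2 / 2):.3f}")
```

Printing the variance next to its rescaling by e^{-\frac{1}{2}m^2} shows how much of the growth is exponential.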

On the other hand, let \ell(p; X) \overset{\mathrm{def}}{=}\log \mu_p(X), and let \ell'(p; X) \overset{\mathrm{def}}{=}\partial_p \ell(p; X). It is well known that, under standard regularity conditions, \begin{aligned}
\sqrt{n}(\hat p_{\text{MLE}} - p^\star) &\overset{\text{D}}{\to} \mathcal N\left( 0, \frac{1}{\mathbb E[\ell'(p^\star; X)^2]} \right).
\end{aligned} Meanwhile, we compute that \begin{aligned}
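\mathbb{E}[\ell'(p^\star; X)^2]
&= \int \frac{(\mu_+(x) - \mu_-(x))^2}{\mu_{p^\star}(x)} \,\mathrm{d}{x} \\
&= 2\int \frac{(\mu_+(x) - \mu_-(x))^2}{\mu_+(x) + \mu_-(x)} \,\mathrm{d}{x} \\
&= 2\int \frac{(\mu_+(x) + \mu_-(x))^2 - 4 \mu_+(x)\mu_-(x)}{\mu_+(x) + \mu_-(x)} \,\mathrm{d}{x} \\
&= 4\left( 1-2\int \frac{\mu_+(x)\mu_-(x)}{\mu_+(x)+\mu_-(x)} \,\mathrm{d}{x} \right) \\
&\geq 4\left( 1-2\int_{x \geq 0} \frac{\mu_+(x)\mu_-(x)}{\mu_+(x)} \,\mathrm{d}{x} - 2\int_{x < 0} \frac{\mu_+(x)\mu_-(x)}{\mu_-(x)} \,\mathrm{d}{x} \right) \\
&= 4\left( 1-4\,\mathbb{P}(Z \geq m) \right) \\
&\geq 4\left( 1 - \frac{2}{\sqrt{1 + m^2}}\exp\left( -\frac{m^2}{2} \right) \right),
\end{aligned} where Z \sim \mathcal N(0, 1). The second equality uses \mu_{p^\star} = \frac{1}{2}(\mu_+ + \mu_-). The inequality uses \mu_+ + \mu_- \geq \mu_+ on \{x \geq 0\} and \mu_+ + \mu_- \geq \mu_- on \{x < 0\}. The following equality uses \int_{x \geq 0} \mu_- = \int_{x < 0} \mu_+ = \mathbb P(Z \geq m). The last step uses the Mills-ratio-type tail bound 2\,\mathbb P(Z \geq m) \leq \frac{e^{-m^2/2}}{\sqrt{1 + m^2}}. Hence, for m large enough that the right-hand side is positive (e.g. m \geq 1), the rescaled asymptotic variance of the MLE is bounded by \begin{aligned}
\sigma^2_{\text{MLE}}(m) &= \frac{1}{\mathbb E[\ell'(p^\star; X)^2]} \leq \frac{1}{4\left( 1 - \frac{2e^{-\frac{1}{2}m^2}}{\sqrt{1+m^2}} \right)},
\end{aligned} which stays bounded as m \to \infty, as desired.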
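
The Fisher information can also be checked numerically. The sketch below assumes \mu_{p^\star} = \frac{1}{2}(\mu_+ + \mu_-), with \mu_\pm the densities of \mathcal N(\pm m, 1). It computes \mathbb E[\ell'(p^\star; X)^2] = 4\,\mathbb E[\tanh^2(mX)] by quadrature, using \ell'(p^\star; x) = 2\tanh(mx) as in Section 3.1. It then compares the result with the elementary bound 4(1 - 4\,\mathbb P(Z \geq m)). That bound follows from the expression 4\left(1 - 2\int \frac{\mu_+\mu_-}{\mu_+ + \mu_-}\right) together with \frac{\mu_+\mu_-}{\mu_+ + \mu_-} \leq \min(\mu_+, \mu_-). The function names are hypothetical.

```python
import math

import numpy as np

# Assumed model (hypothetical): X ~ 0.5 N(m, 1) + 0.5 N(-m, 1), so that l'(p*; x) = 2 tanh(m x).

def fisher_information(m, half_width=12.0, num=200_001):
    """E[l'(p*; X)^2] = 4 E[tanh(mX)^2], computed by a Riemann sum on a fine grid."""
    x = np.linspace(-m - half_width, m + half_width, num)
    dens = (np.exp(-0.5 * (x - m) ** 2) + np.exp(-0.5 * (x + m) ** 2)) / (2 * np.sqrt(2 * np.pi))
    return float(np.sum(4 * np.tanh(m * x) ** 2 * dens) * (x[1] - x[0]))

def tail_based_lower_bound(m):
    """4 * (1 - 4 P(Z >= m)) for Z ~ N(0, 1), using P(Z >= m) = erfc(m / sqrt(2)) / 2."""
    return 4 * (1 - 2 * math.erfc(m / math.sqrt(2)))

for m in (1.0, 2.0, 3.0, 4.0):
    info = fisher_information(m)
    print(f"m={m:.0f}  E[l'^2]={info:.4f}  bound={tail_based_lower_bound(m):.4f}  sigma^2_MLE={1 / info:.4f}")
```

The last column is the MLE's rescaled asymptotic variance. It stays near 1/4 as m grows, in contrast with the score matching estimator.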

Having shown both claims in the theorem, we conclude our proof. ◻